What is a CDN and how does it work?

A CDN (Content Delivery Network) is a network of servers spread across different geographical locations.

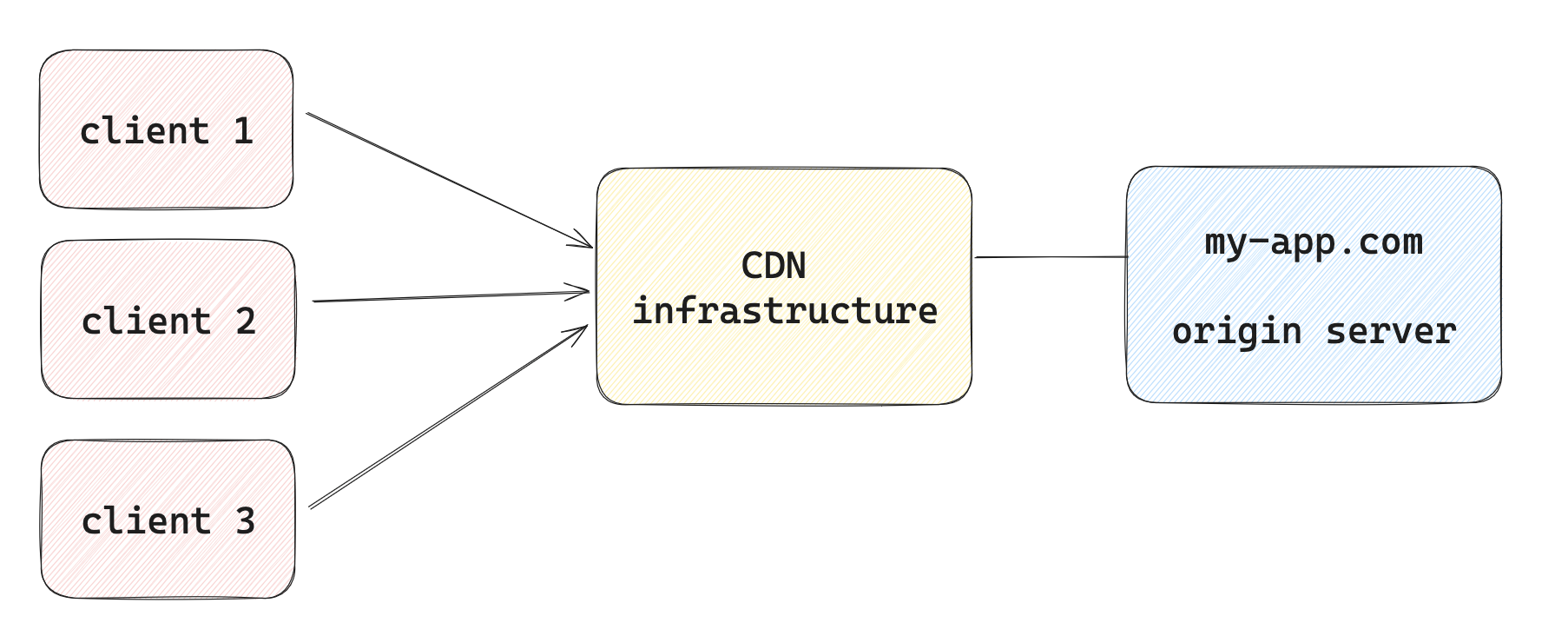

CDN servers act as a middleman between the origin server, where your application is hosted, and the clients who send requests to your server.

Imagine clients are sending requests to your server hosted at my-app.com. In such a scenario, the CDN can handle all these requests directed towards the my-app.com origin.

The CDN is aware of which server hosts the content for the my-app.com domain. Whenever necessary, the CDN can retrieve data from this origin server, so communication goes two ways.

The CDN works invisibly, so neither the client nor the origin server needs to know it's there.

This means that when a client opens a URL my-app.com/*, they don't need to worry about what type of server is handling that request. Similarly, the origin server handles all valid requests without concern for who made them.

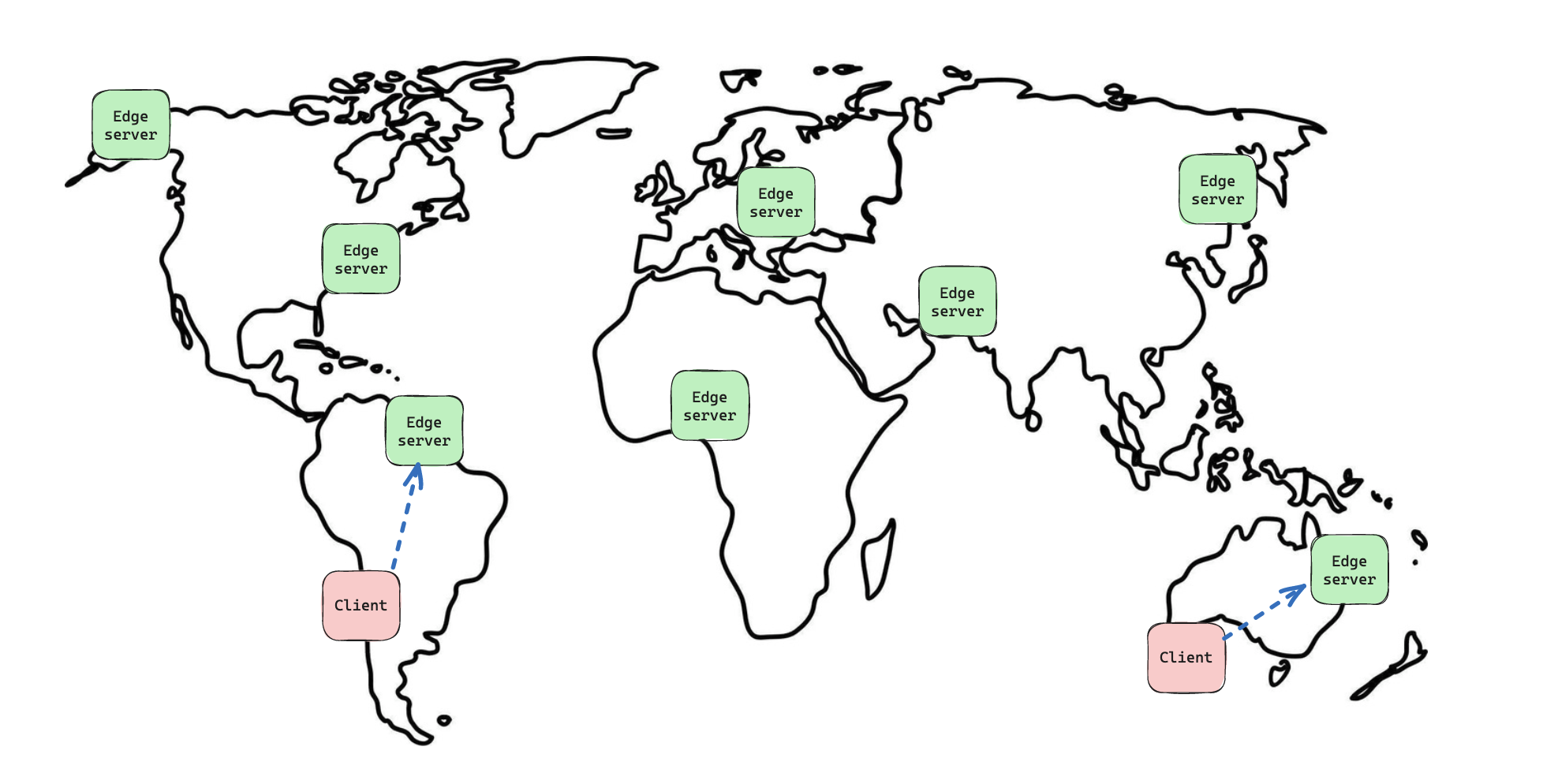

The geographically scattered servers within a CDN are known as Edge servers and they look like this:

Each request sent to a CDN is handled by the Edge server nearest to the requesting client's location.

Network latency is how long it takes for a data packet to travel from one point to another. Because the Edge server is physically closer to the client, the packets travel a shorter distance, which lowers latency and lets responses served directly from the Edge server arrive faster.
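As a rough illustration of "nearest Edge server", the sketch below picks the closest location by great-circle distance. The location names and coordinates are made up for the example, and real CDNs typically route with DNS or anycast and also weigh network conditions, so treat this as a simplified model rather than how any provider actually does it.

```python
import math

# Hypothetical Edge locations as (latitude, longitude) in degrees.
EDGE_LOCATIONS = {
    "frankfurt": (50.11, 8.68),
    "singapore": (1.35, 103.82),
    "virginia": (38.95, -77.45),
}


def great_circle_km(a, b):
    """Haversine distance in kilometres between two (lat, lon) points."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * 6371.0 * math.asin(math.sqrt(h))


def nearest_edge(client_location, edges=EDGE_LOCATIONS):
    """Return the name of the Edge location closest to the client."""
    return min(edges, key=lambda name: great_circle_km(client_location, edges[name]))
```

With these assumed coordinates, a client in Paris at (48.86, 2.35) would be sent to "frankfurt", and a client in Tokyo at (35.68, 139.69) to "singapore".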

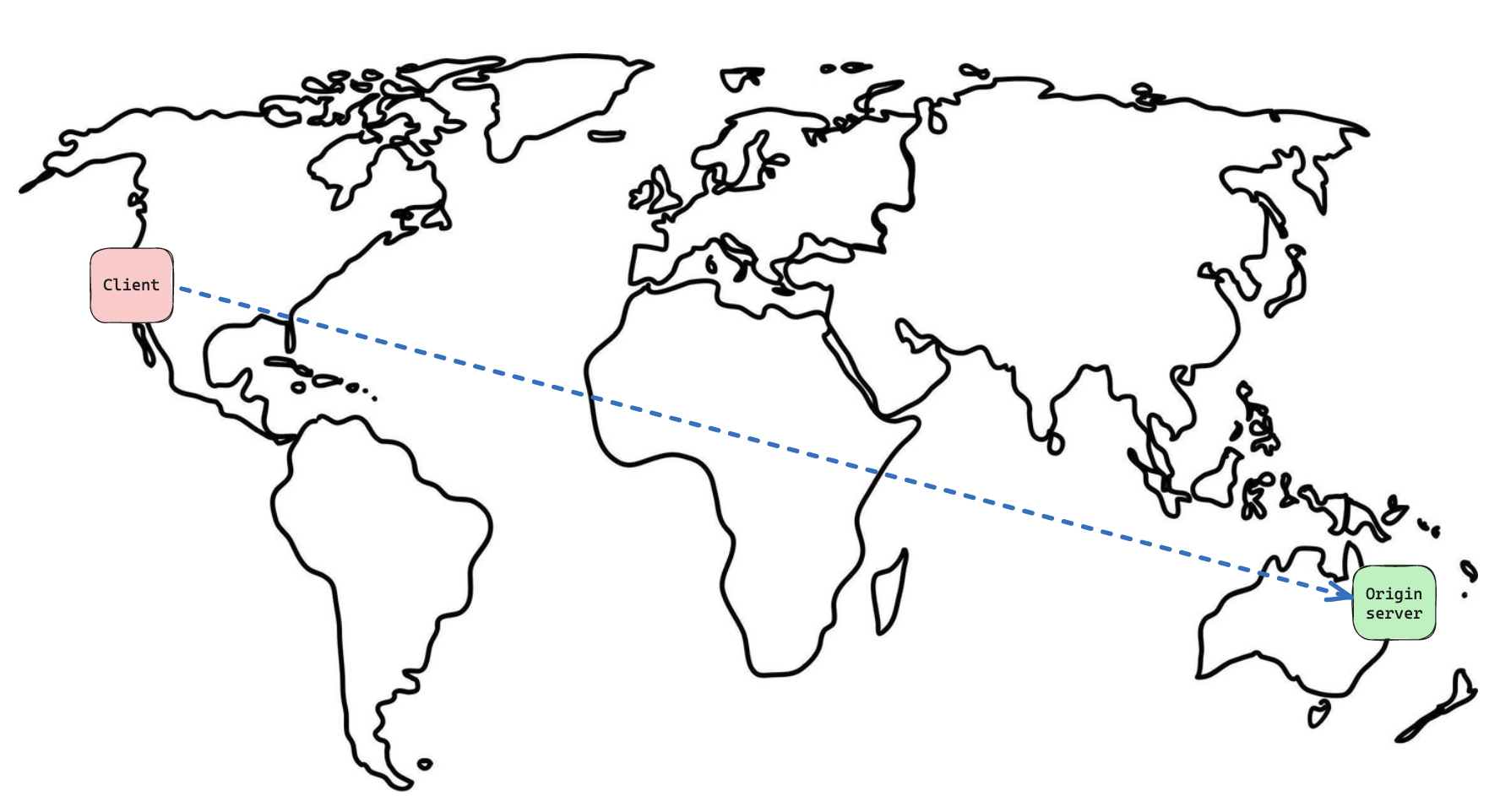

Without a CDN, the process appears as follows:

CDN caching

A CDN can also be configured to handle caching.

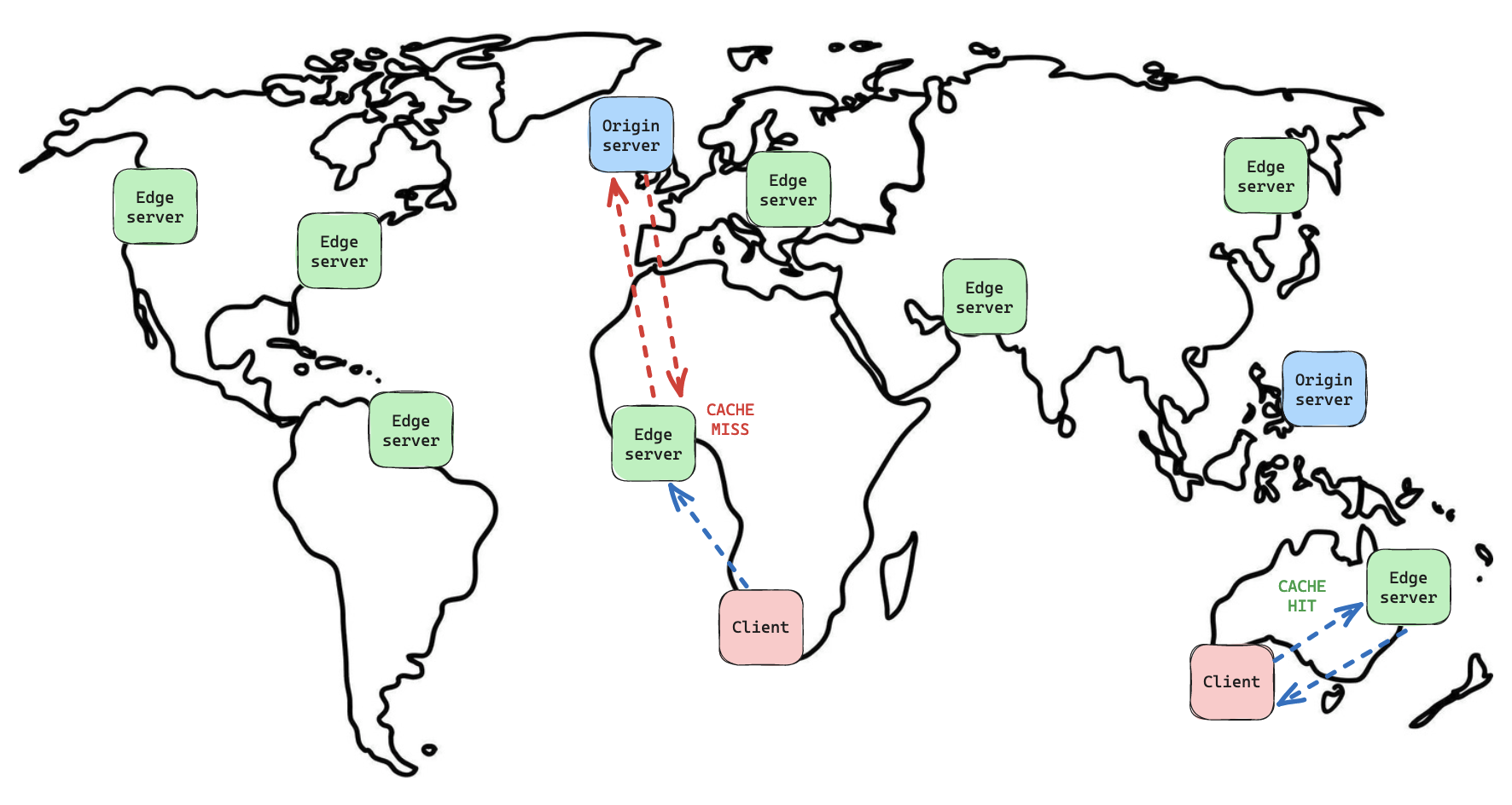

When an Edge server receives a request, it can either:

- Serve the request independently.

- Contact the origin server.

For instance, when you visit the URL my-app.com/profile, the Edge server thinks like this:

- Can I handle this URL request from my cache?

- If yes, do I have an up-to-date version of the response in my cache?

When the Edge server has an up-to-date version in its cache, it will independently handle the request. This is called a cache hit.

A cache hit results in faster speed since the Edge server, being close to the client, simply retrieves the response from its cache and sends it, without any processing.

If the Edge server lacks a recent version in its cache, it will contact the origin server to get the response. This is called a cache miss.

In this scenario, the origin server handles the processing and fulfills the request for the Edge server. Afterward, the Edge server stores that response in its cache for potential future requests.
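The hit and miss flow described above can be sketched in a few lines. This is a minimal model, assuming a fixed time-to-live decides what counts as "up-to-date"; the class name EdgeCache, the ttl_seconds parameter, and the fetch_from_origin callback are all hypothetical, and real CDNs usually derive freshness from HTTP caching headers instead.

```python
import time


class EdgeCache:
    """Toy Edge cache: serves fresh entries itself, otherwise asks the origin."""

    def __init__(self, fetch_from_origin, ttl_seconds=60.0, clock=time.monotonic):
        self._fetch = fetch_from_origin  # callable: url -> response (assumed)
        self._ttl = ttl_seconds          # how long an entry counts as up-to-date
        self._clock = clock              # injectable so the logic can be tested
        self._store = {}                 # url -> (response, stored_at)

    def handle(self, url):
        """Return (response, "HIT" or "MISS") for the requested URL."""
        now = self._clock()
        entry = self._store.get(url)
        if entry is not None and now - entry[1] < self._ttl:
            return entry[0], "HIT"       # fresh copy: no origin round trip
        response = self._fetch(url)      # missing or stale: contact the origin
        self._store[url] = (response, now)
        return response, "MISS"
```

The first request for a URL is a miss and populates the cache; repeated requests within the TTL are hits and never reach the origin.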

Edge computing

Certain CDN providers have empowered their Edge servers to not just cache responses but also execute some code. Here are some examples:

When you access the URL my-app.com/profile/user1/image.png:

- The Edge server receives the user1 profile image sized at 10MB.

- It can optimize the image, shrinking it down to 200KB.

- The optimized image is then stored in the cache.

- Finally, the Edge server sends the image to the client.

These edge computing services are offered by various companies:

- AWS CloudFront has its CloudFront Functions

- Cloudflare has its Workers

- Fastly has its Compute@Edge

Every service varies in how it's implemented and what it can do.

The Edge server can also edit a request before sending it to the origin server. For example:

- When a client requests the URL my-app.com/profile,

- The Edge server examines the request headers and sees that the Accept-Language header is set to zh-CN, indicating Chinese language preference.

- The Edge server will add /cn to the requested URL to fetch.

- It then delivers and caches the response with the corresponding language.
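A minimal sketch of this request rewrite follows. It assumes that /cn is added as a path prefix (the text does not say where it goes), and the LANGUAGE_PREFIXES mapping and both function names are invented for illustration. Each edge platform exposes its own API for reading headers and rewriting requests, so only the decision logic is shown.

```python
# Hypothetical mapping from language tag to the path prefix used by the origin.
LANGUAGE_PREFIXES = {"zh-cn": "/cn"}


def preferred_language(accept_language):
    """Return the highest-weighted tag of an Accept-Language value, lowercased."""
    best_tag, best_q = None, -1.0
    for part in accept_language.split(","):
        piece = part.strip()
        if not piece:
            continue
        tag, _, params = piece.partition(";")
        params = params.strip()
        q = 1.0  # a tag without an explicit weight counts as q=1
        if params.startswith("q="):
            try:
                q = float(params[2:])
            except ValueError:
                q = 0.0
        if q > best_q:
            best_tag, best_q = tag.strip().lower(), q
    return best_tag


def rewrite_path(path, accept_language):
    """Prefix the path for languages that have a dedicated variant."""
    prefix = LANGUAGE_PREFIXES.get(preferred_language(accept_language) or "")
    return prefix + path if prefix else path
```

For a header value of "zh-CN,zh;q=0.9,en;q=0.8", rewrite_path("/profile", ...) gives "/cn/profile", while an English-only header leaves the path unchanged. The cached copy also has to be stored per language, otherwise a Chinese response could be served to an English reader.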

The Edge server is capable of modifying the response it receives from the origin server before sending it to the client. For example:

- When a client requests the URL my-app.com/profile/style.css,

- The Edge server checks the headers and finds that the Accept-Encoding header supports Brotli compression.

- It retrieves the response from the origin server.

- Then, it compresses the response using Brotli compression and sends it to the client.

- Finally, it caches the response for future requests.
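The response-side steps can be sketched as a small content-negotiation function. Python's standard library has no Brotli encoder, so this sketch only ships a gzip compressor by default and takes the Brotli one as an injected callable; the function names and the compressors parameter are assumptions made for the example, not any CDN's API.

```python
import gzip


def accepted_encodings(accept_encoding):
    """Parse an Accept-Encoding value into {coding: weight}."""
    result = {}
    for part in accept_encoding.split(","):
        piece = part.strip()
        if not piece:
            continue
        coding, _, params = piece.partition(";")
        params = params.strip()
        q = 1.0  # a coding without an explicit weight counts as q=1
        if params.startswith("q="):
            try:
                q = float(params[2:])
            except ValueError:
                q = 0.0
        result[coding.strip().lower()] = q
    return result


def encode_response(body, accept_encoding, compressors=None):
    """Return (encoded_body, coding), preferring Brotli ("br") over gzip."""
    if compressors is None:
        compressors = {"gzip": gzip.compress}  # no stdlib Brotli encoder
    accepted = accepted_encodings(accept_encoding)
    for coding in ("br", "gzip"):
        # Use a coding only if the client accepts it and we can produce it.
        if coding in compressors and accepted.get(coding, 0.0) > 0.0:
            return compressors[coding](body), coding
    return body, "identity"  # fall back to the uncompressed body
```

Passing a Brotli encoder from a third-party package under the "br" key would make the function choose Brotli whenever the client lists it. As with the language example, the cache would need to keep one copy per encoding.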

In these ways, the Edge server can be programmed to improve caching and deliver a better user experience.

Serverless computing with Edge servers?

In addition to the examples mentioned earlier, Edge servers can make use of serverless computing.

But what does this mean?

It means that Edge servers can generate a response without relying on an origin server.

In other words, the Edge server can create the HTML for a specific request, cache it, and serve it directly without contacting the origin server.

This is particularly advantageous when you want to utilize serverless architecture. Moreover, this approach can be cost-effective.

Edge servers can be configured to avoid keeping dedicated server processes running for the origins they serve.

Instead, they initiate the server process only when a request is received. This strategy helps conserve CPU and memory resources, as websites with no traffic consume no CPU and memory.

However, initiating the environment to handle the request only upon its arrival can sometimes slow down response speed. This delay is commonly referred to as a "cold start" delay.

Behind the scenes, when a client requests a URL such as my-app.com/profile, the Edge server needs to start a server process (for example, a Node.js process). This process runs the necessary code and serves the HTML for that specific request.

Typically, Edge servers keep these environments active for a certain time after serving the request. This prevents a cold start delay if a similar request comes in the future and requires the same environment.
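The difference between a cold and a warm start can be modelled as follows. This is a simplified sketch: the EnvironmentPool class, the start_environment callback, and the keep_alive_seconds value are hypothetical, and actual platforms decide for themselves how long an idle environment is kept around.

```python
import time


class EnvironmentPool:
    """Toy model of an Edge server that starts environments on demand."""

    def __init__(self, start_environment, keep_alive_seconds=300.0,
                 clock=time.monotonic):
        self._start = start_environment      # slow step: app -> request handler
        self._keep_alive = keep_alive_seconds
        self._clock = clock                  # injectable so the logic can be tested
        self._warm = {}                      # app -> (handler, last_used_at)

    def handle(self, app, request):
        """Return (response, "cold" or "warm") for a request to the given app."""
        now = self._clock()
        entry = self._warm.get(app)
        if entry is not None and now - entry[1] <= self._keep_alive:
            handler, start_kind = entry[0], "warm"       # reuse the environment
        else:
            handler, start_kind = self._start(app), "cold"  # pay the startup cost
        self._warm[app] = (handler, now)     # (re)start the keep-alive window
        return handler(request), start_kind
```

Only the first request, or a request arriving after the keep-alive window has passed, pays the startup cost; everything in between reuses the running environment.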

To minimize the cold start delay, various strategies can be used:

- Keep-warm requests: a script can be scheduled to send a request to the Edge server every minute, keeping the execution environment "warm".

- Provisioned concurrency: Platforms like AWS Lambda can keep a configured number of execution environments initialized and ready, avoiding cold start delays.

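- V8 isolates: Some services, such as Cloudflare Workers, run code in V8 isolates (lightweight sandboxes provided by the JavaScript engine used in Chrome) instead of starting a separate server process, so an environment can start in under 10 milliseconds.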

The choice of approach depends on your application architecture and requirements.

Scalability

Utilizing Edge servers for request handling can improve scalability.

This is because CDN providers run infrastructure built to expand during surges in traffic (e.g., the Slashdot effect or Black Friday) and remain operational without interruption.

Edge servers can quickly ramp up to handle anywhere from 0 to 1000 requests per second within minutes, while your origin server can remain inactive.

However, this capability shouldn't be taken for granted.

Simply deploying your app on the Edge server won't ensure scalability if any layer of your application fails to scale.

For instance, caching responses from the API or database server on the Edge server is crucial for this. Depending on your application, it can be achieved in a simple or complex manner.

To effectively utilize serverless Edge for request handling, you need to carefully consider various factors:

- The need for speed improvement from Edge server delivery

- The potential impact of cold start delays

- Possible cost savings

- Where other parts of your application are deployed (e.g., API, database)

- Comparison with alternative options (e.g., Platform as a Service, Docker)

- Traffic patterns (spikes or predictable)
